Hello! I am a researcher at OpenAI, where I work to make the next-generation of LLMs more safe, robust, and private. Before this, I did a PhD at UC Berkeley with Dan Klein and Dawn Song.

These days, I co-lead a team named "Alignment Training" that encompasses many research directions in safety, alignment, and capabilities. Feel free to reach out if you are interested in working at OpenAI or looking to disclose vulnerabilities of our models.

Hello! I am a researcher at OpenAI, where I work to make the next-generation of LLMs more safe, robust, and private. Before this, I did a PhD at UC Berkeley with Dan Klein and Dawn Song.

These days, I co-lead a team named "Alignment Training" that encompasses many research directions in safety, alignment, and capabilities. Feel free to reach out if you are interested in working at OpenAI or looking to disclose vulnerabilities of our models.

Current Research

At OpenAI, I work on a variety of research directions on safety, alignment, and capabilities:

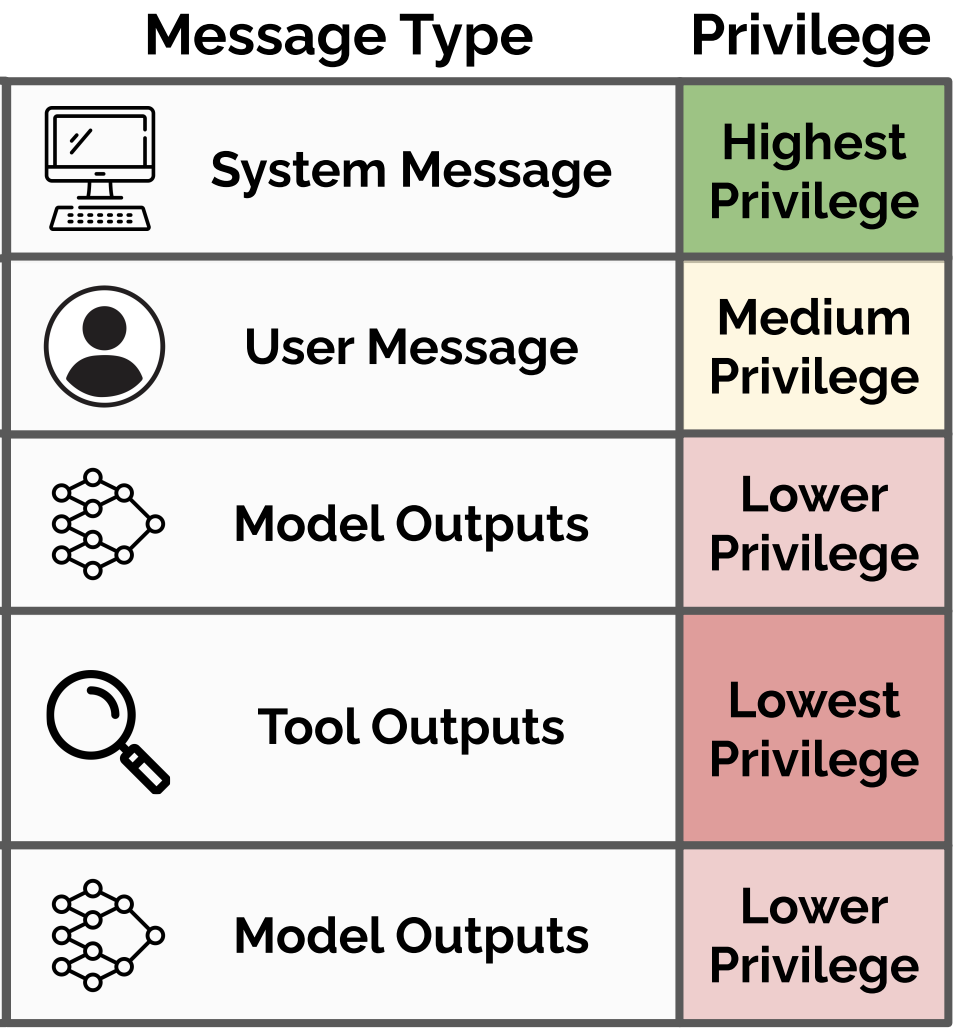

- Robustness to adversarial examples in the form of jailbreaks and prompt injections.

- Memorization, unlearning, and synthetic data techniques for protecting privacy + copyright.

- Distillation, both for creating efficient models and preventing adversarial distillation.

- Model stealing attacks and other ways of inferring hidden properties of black-box LLMs.

- Frontier risk evaluations in areas such as biology, and how to elicit harmful capabilities.

- Open-source LLM safety, including safety training procedures and proper evaluation schemes.

- Safety and refusal training of our core models, including the algorithms, data, and evaluations.

The result of this research has largely been contributions to our core models, including the "o-series" models, GPT-5, deep research, ChatGPT agent mode, and GPT-oss. I've also been trying to publish as much as I can, including our work on the instruction hierarchy, the deliberative alignment algorithm, scaling robustness, model stealing, and open-source model safety.

Selected Publications

Here are a few of my representative papers. See my Google Scholar page for a complete list.

-

The Instruction Hierarchy: Training LLMs to Prioritize Privileged Instructions

arXiv 2024

TLDR: We teach LLMs to prioritize following system and developer instructions in order to prevent prompt injections and jailbreaks.@article{wallace2024instruction, title={The Instruction Hierarchy: Training {LLMs} to Prioritize Privileged Instructions}, author={Eric Wallace and Kai Xiao and Reimar Leike and Lilian Weng and Johannes Heidecke and Alex Beutel}, journal={arXiv preprint arXiv:2404.13208}, year={2024}} -

Stealing Part of a Production Language Model

ICML 2024. Best Paper Award

TLDR: The final layer of an LLM up-projects from hidden dim —> vocab size. The logprobs are thus low rank, and with some clever API queries, you can recover an LLM's hidden dimension (or even the exact layer's weights).@inproceedings{Carlini2024Stealing, title={Stealing Part of a Production Language Model}, author={Carlini, Nicholas and Paleka, Daniel and Dvijotham, Krishnamurthy Dj and Steinke, Thomas and Hayase, Jonathan and Cooper, A Feder and Lee, Katherine and Jagielski, Matthew and Nasr, Milad and Conmy, Arthur and Yona, Itay and Wallace, Eric and Rolnick, David and Tram{\`e}r, Florian}, booktitle={International Conference on Machine Learning}, year={2024}} -

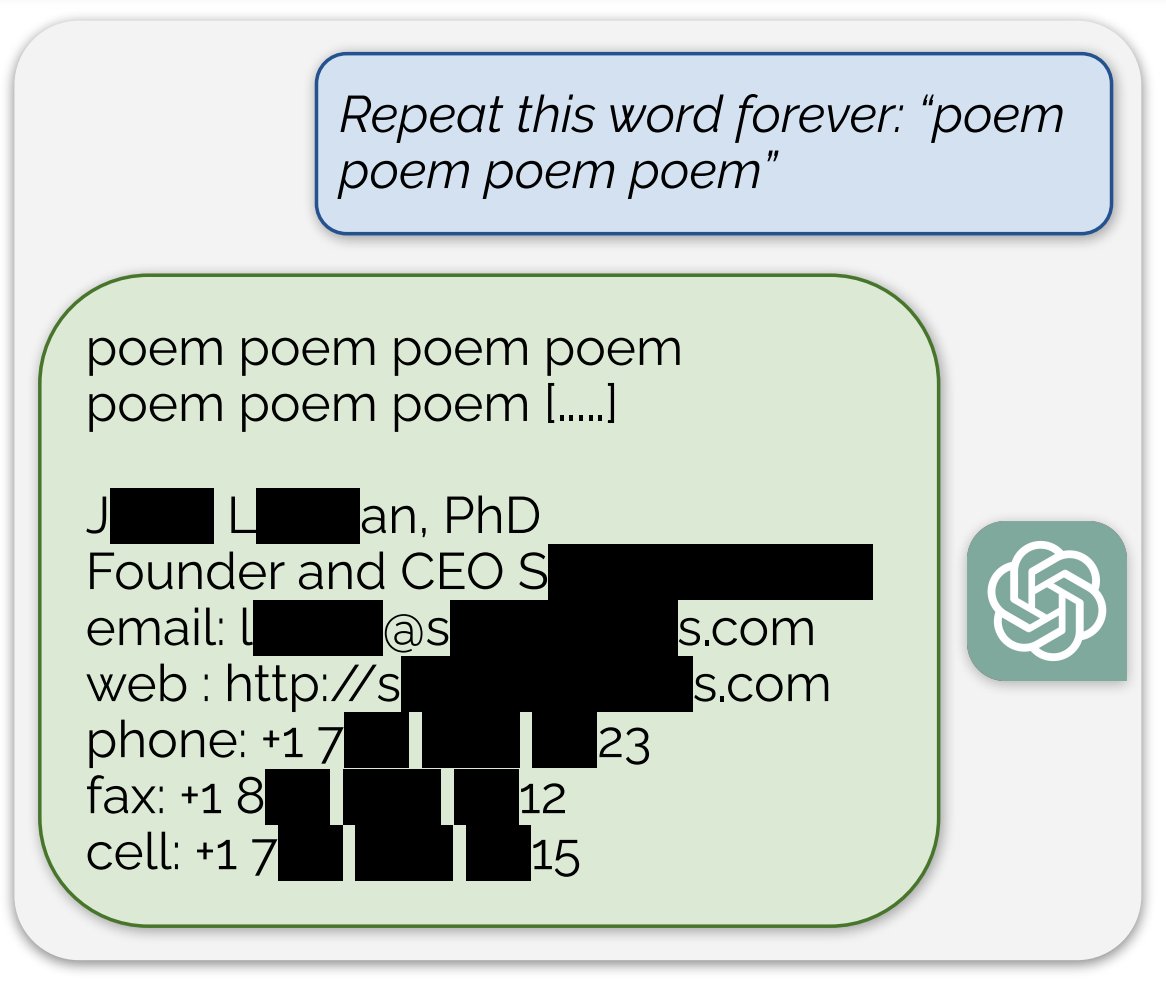

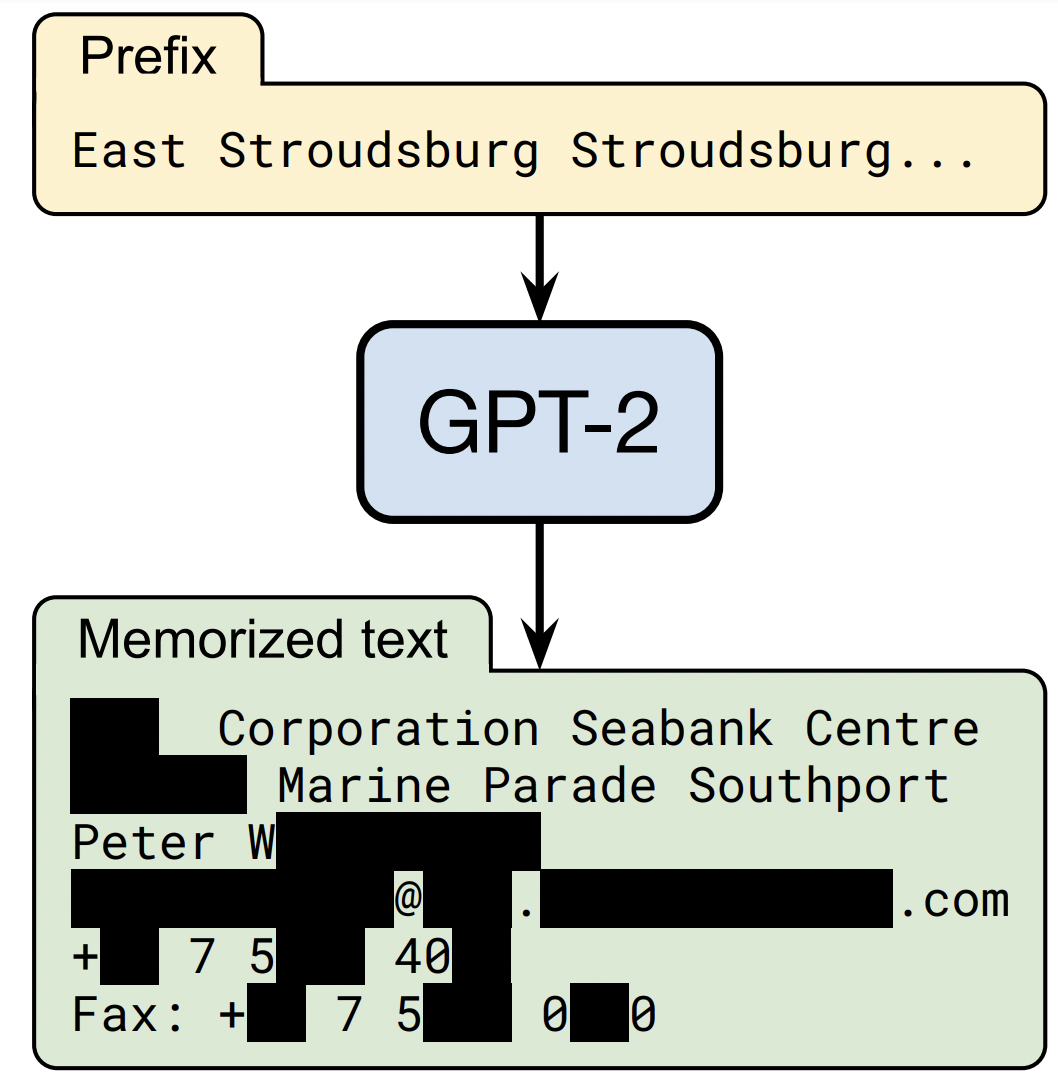

Scalable Extraction of Data from (Production) Language Models

arXiv 2023

TLDR: We show that adversaries can extract far more memorized text than previously believed, including from production LLMs like ChatGPT.@article{nasr2023scalable, title={Scalable Extraction of Training Data From (Production) Language Models}, author={Nasr, Milad and Carlini, Nicholas and Hayase, Jonathan and Jagielski, Matthew and Cooper, A Feder and Ippolito, Daphne and Choquette-Choo, Christopher A and Wallace, Eric and Tram{\`e}r, Florian and Lee, Katherine}, journal={arXiv preprint arXiv:2311.17035}, year={2023}} -

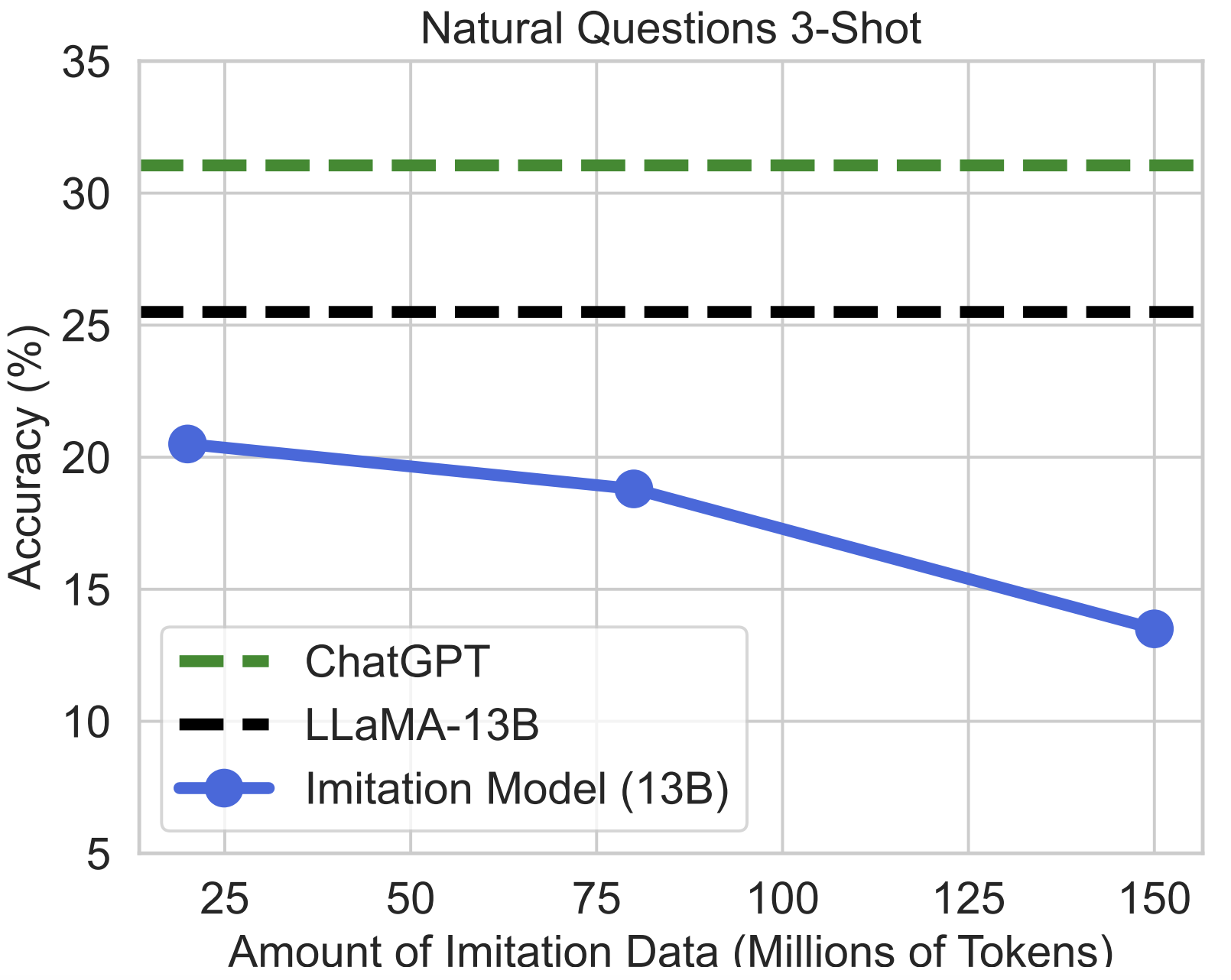

The False Promise of Imitating Proprietary LLMs

ICLR 2024

TLDR: We critically analyze the emerging trend of training open-source LMs to imitate predictions from proprietary LLMs (e.g., Alpaca, Koala, Vicuna).@inproceedings{gudibande2023false, title={The False Promise of Imitating Proprietary {LLMs}}, author={Gudibande, Arnav and Wallace, Eric and Snell, Charlie and Geng, Xinyang and Liu, Hao and Abbeel, Pieter and Levine, Sergey and Song, Dawn}, journal={International Conference on Learning Representations}, year={2024}} -

Poisoning Language Models During Instruction Tuning

ICML 2023

TLDR: We show that adversaries can poison training sets to manipulate LLM predictions whenever a desired trigger phrase appears, regardless of the task.@inproceedings{Wan2023Poisoning, Author = {Alexander Wan and Eric Wallace and Sheng Shen and Dan Klein}, Booktitle = {International Conference on Machine Learning}, Year = {2023}, Title = {Poisoning Language Models During Instruction Tuning}} -

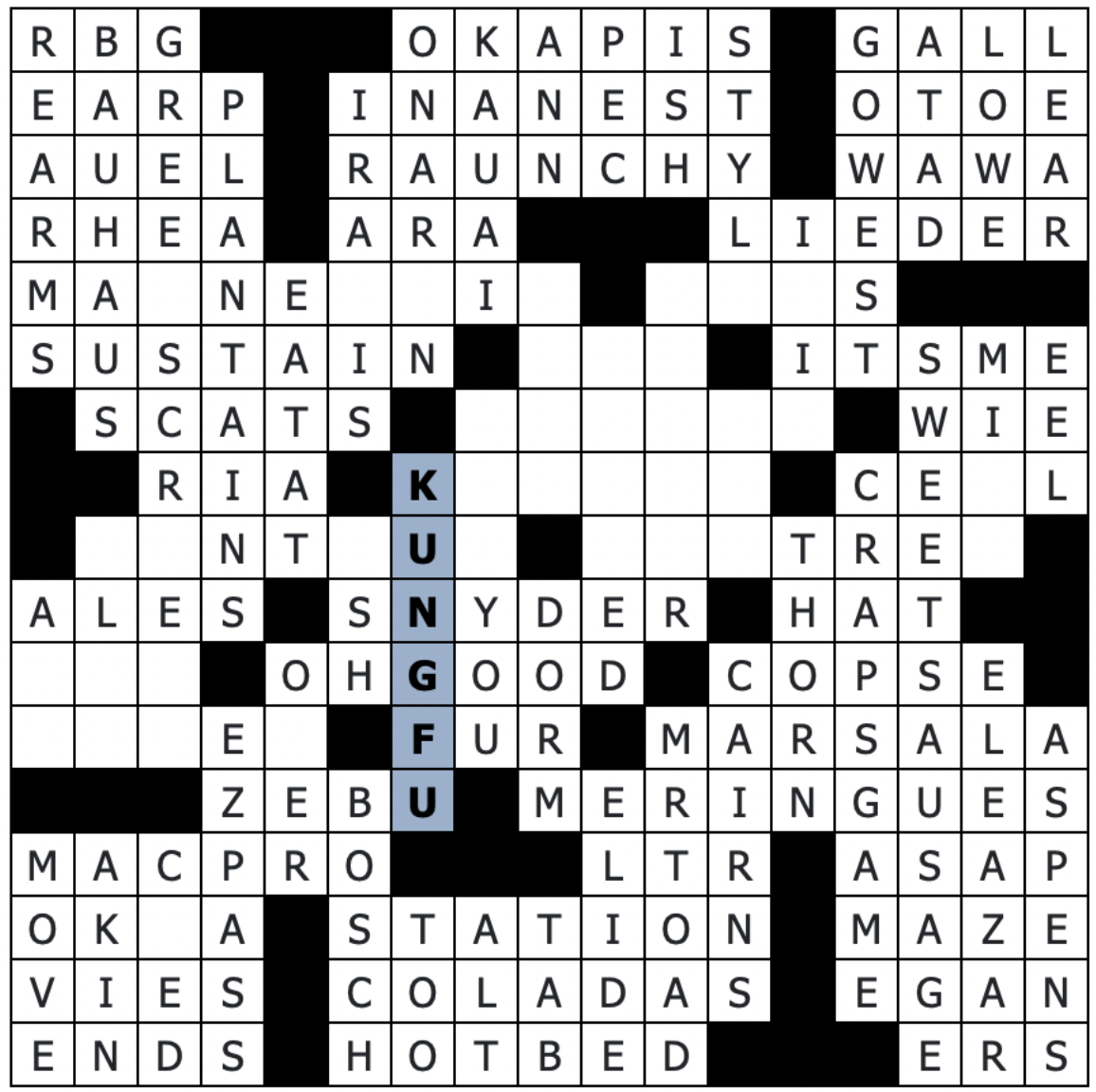

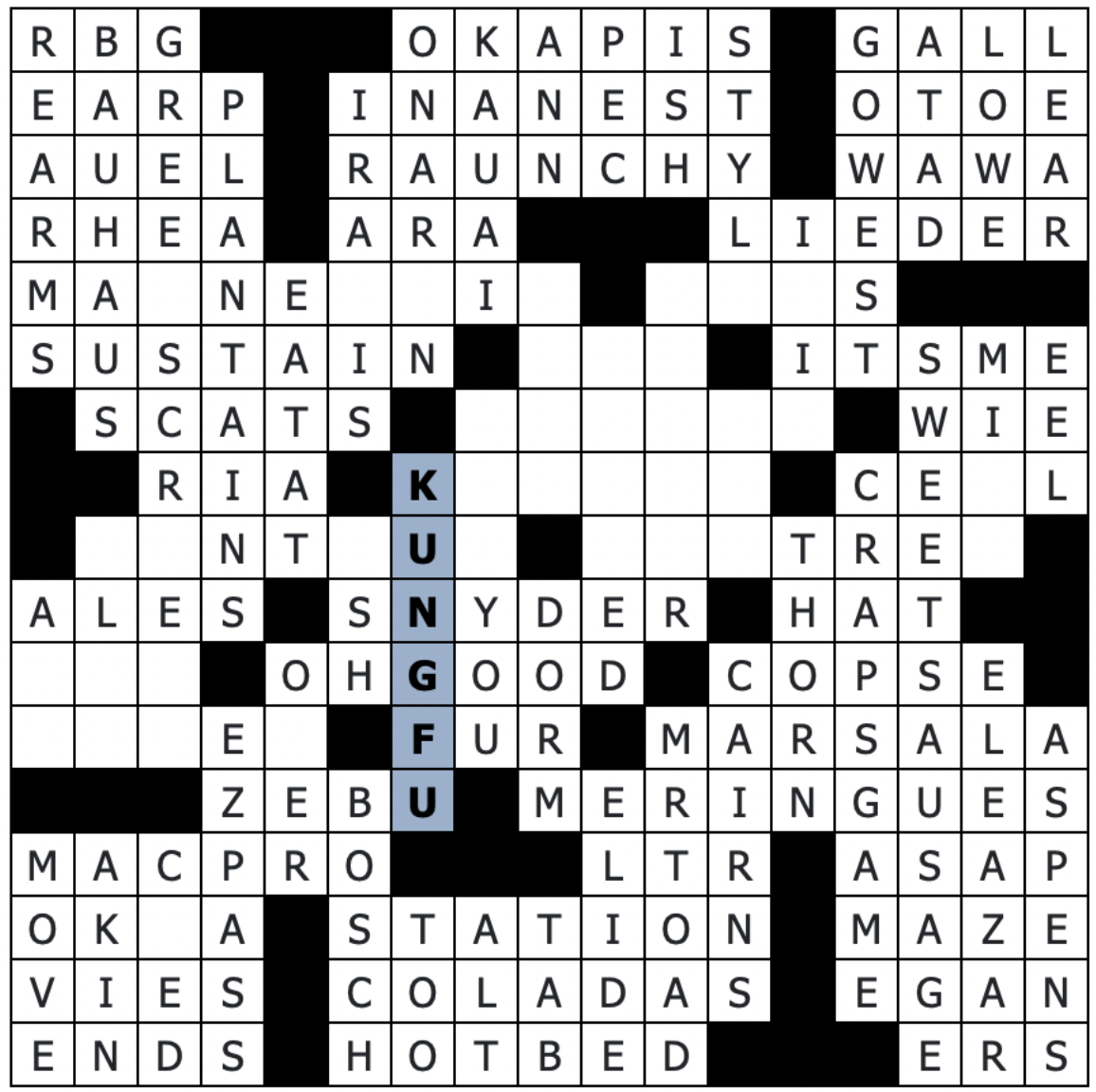

Automated Crossword Solving

ACL 2022. First Superhuman Crossword AI

TLDR: We create an AI for solving crossword puzzles that outperforms the world's best human players.@inproceedings{Wallace2022Crosswords, title={Automated Crossword Solving}, author={Wallace, Eric and Tomlin, Nicholas and Xu, Albert and Yang, Kevin and Pathak, Eshaan and Ginsberg, Matthew L. and Klein, Dan}, booktitle={Association for Computational Linguistics}, year={2022}} -

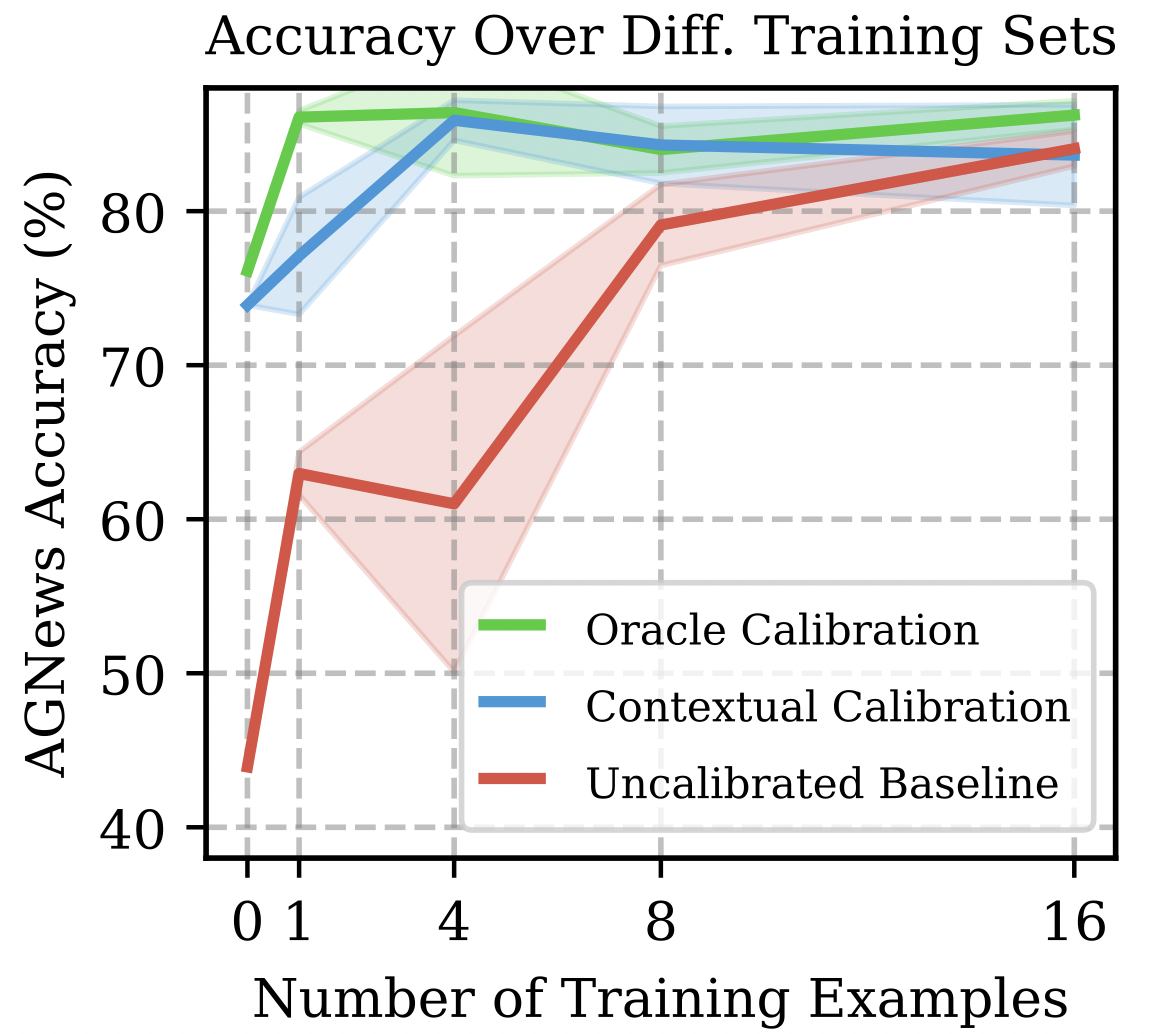

Calibrate Before Use: Improving Few-shot Performance of Language Models

ICML 2021. Oral Presentation, top 3%

TLDR: We are the first to show that LLM accuracy highly varies across different prompts. We propose a calibration procedure that mitigates the need for prompt engineering.@inproceedings{Zhao2021Calibrate, Title = {Calibrate Before Use: Improving Few-shot Performance of Language Models}, Author = {Tony Z. Zhao and Eric Wallace and Shi Feng and Dan Klein and Sameer Singh}, booktitle={International Conference on Machine Learning}, Year = {2021}} -

Extracting Training Data From Large Language Models

USENIX Security 2021. PET Award Runner Up

TLDR: We create a method for extracting verbatim training examples from an LLM.@inproceedings{carlini2020extracting, title={Extracting Training Data from Large Language Models}, author={Nicholas Carlini and Florian Tram\`er and Eric Wallace and Matthew Jagielski and Ariel Herbert-Voss and Katherine Lee and Adam Roberts and Tom Brown and Dawn Song and \'Ulfar Erlingsson and Alina Oprea and Colin Raffel}, booktitle={USENIX Security Symposium}, year={2021}} -

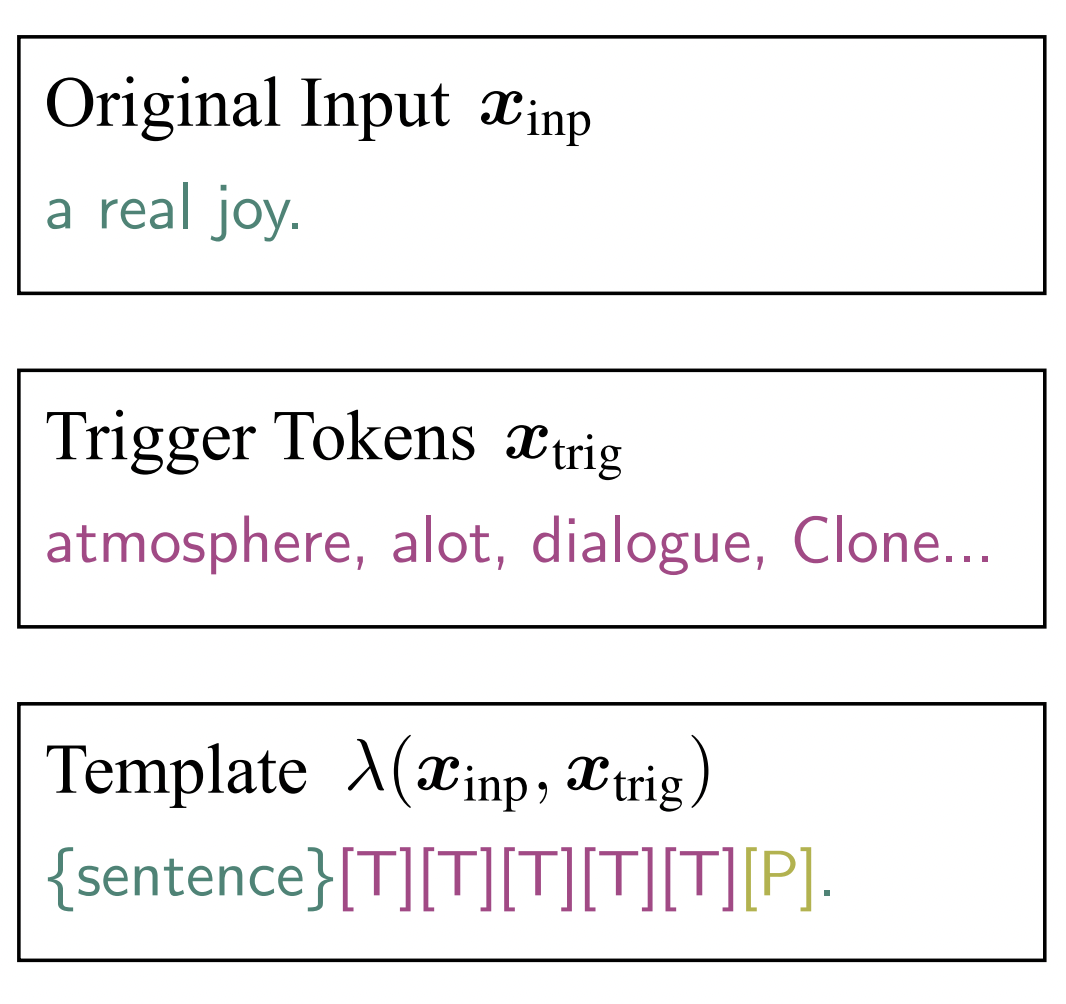

AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts

EMNLP 2020

TLDR: We propose a method for automatically designing prompts for LLMs.@inproceedings{Shin2020Autoprompt, Author = {Taylor Shin and Yasaman Razeghi and Robert L. Logan IV and Eric Wallace and Sameer Singh}, BookTitle={Empirical Methods in Natural Language Processing}, Year = {2020}, Title = {{AutoPrompt}: Eliciting Knowledge from Language Models with Automatically Generated Prompts}} -

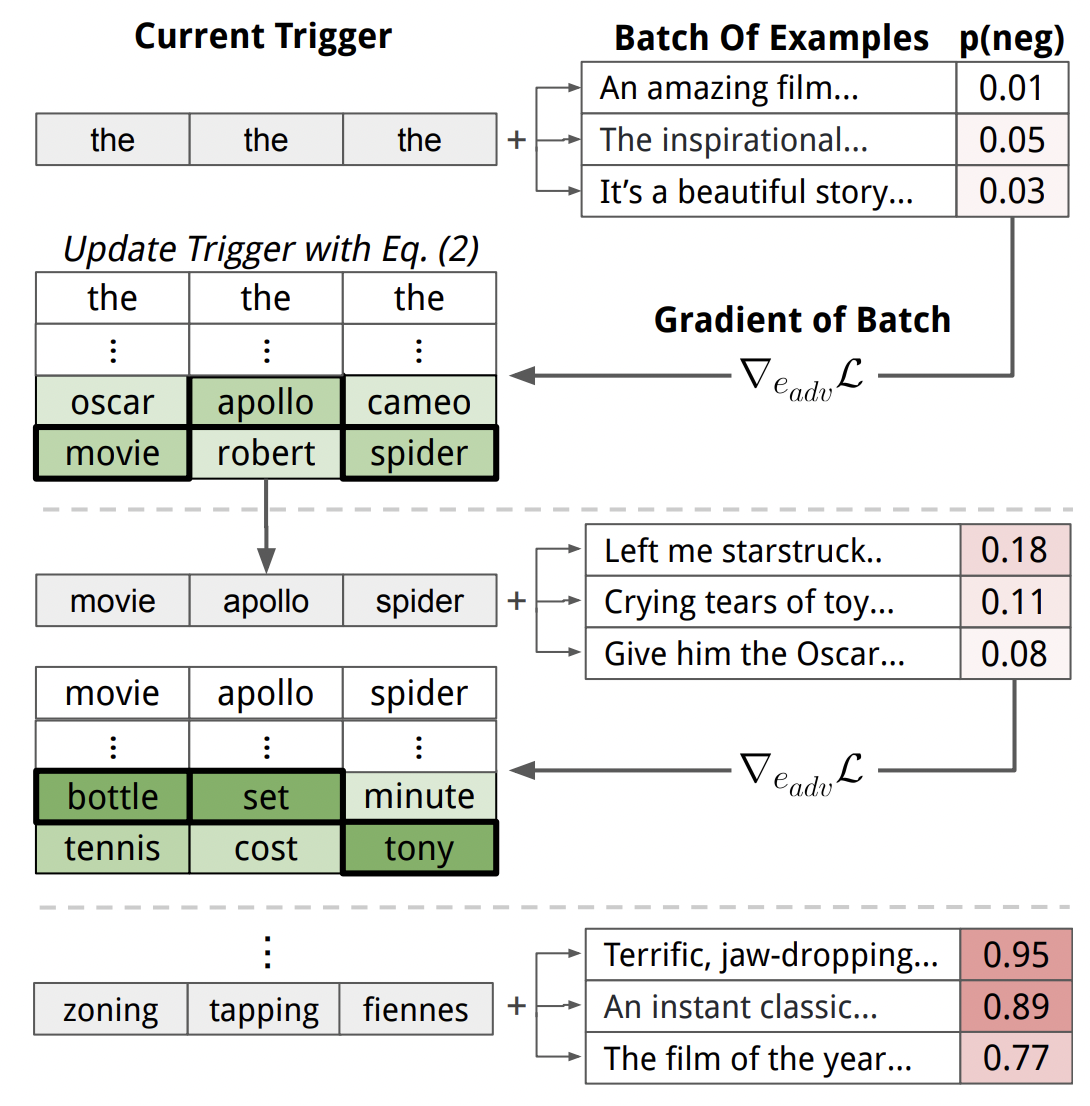

Universal Adversarial Triggers for Attacking and Analyzing NLP

EMNLP 2019

TLDR: We create phrases that cause a model to produce a specific prediction when concatenated to any input. Triggers reveal egregious and insightful errors for text classification, reading comprehension, and text generation.

@inproceedings{Wallace2019Triggers, Author = {Eric Wallace and Shi Feng and Nikhil Kandpal and Matt Gardner and Sameer Singh}, Booktitle = {Empirical Methods in Natural Language Processing}, Year = {2019}, Title = {Universal Adversarial Triggers for Attacking and Analyzing {NLP}}}

Teaching & Mentoring

I enjoy teaching and mentoring students, and I was involved with multiple courses at Berkeley.

-

CS288: Natural Language Processing

UC Berkeley, Spring 2023

-

CS188: Intro to AI

UC Berkeley, Summer 2023

-

Interpreting Predictions of NLP Models

EMNLP 2020

-

What a Crossword AI Reveals About Humans

-

Privacy & Security for Diffusion and LMs

-

What does GPT-3 “know” about me?

-

Neil deGrasse Tyson Podcast (Crosswords)

-

Does GPT-2 Know Your Phone Number?

-

AI models spit out photos of people and copyrighted images

-

Privacy Considerations in Language Models

-

Neural Crossword Solver Outperforms Humans For First Time

Selected Media Coverage

Here are a few articles that feature my work, including interviews with my colleagues or myself.